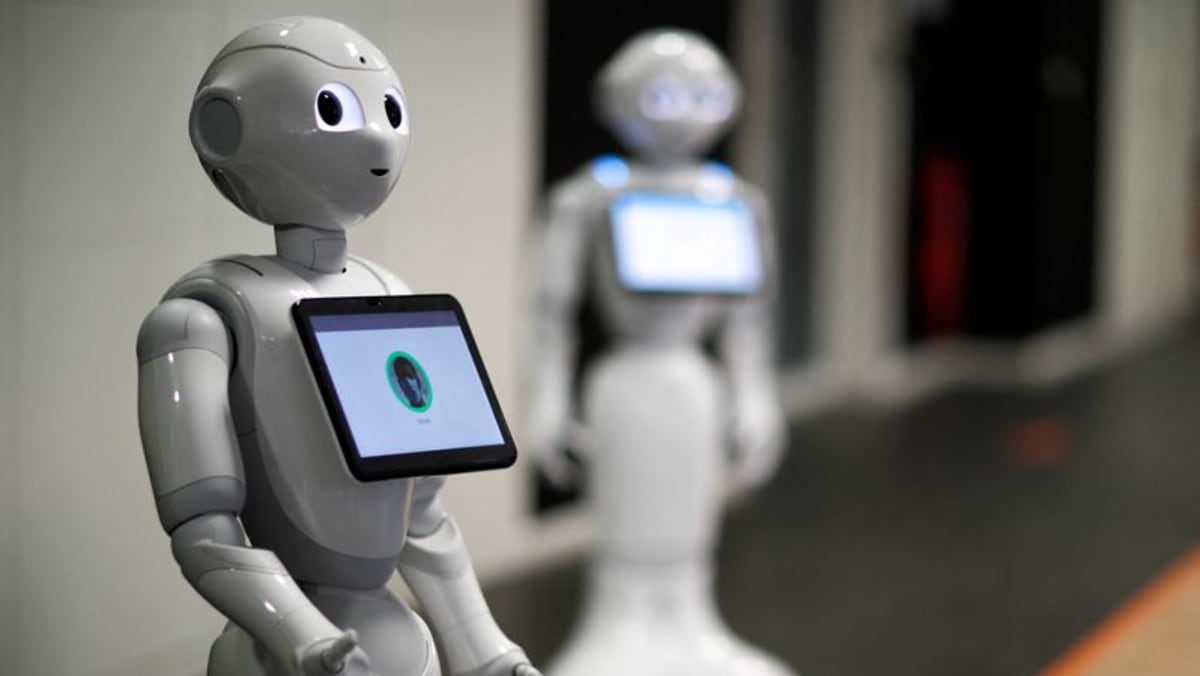

Commentary: AI does not mean the robots are coming

Billions of dollars in venture capital are pouring into robotics start-ups. They aim to apply the same kind of model training techniques that let computers forecast how a protein will fold or generate startlingly realistic text.

They aim, first, to let robots understand what they see in the physical world, and second, to interact with it naturally, solving the huge programming task embodied in as simple an action as picking up and manipulating an object.

Such is the dream. The latest round of investors and entrepreneurs, however, are likely to end up just as disappointed as those who backed Pepper.

That is not because AI is not useful. Rather, it is because the obstacles to making an economically viable robot that can cook dinner and clean the toilets are a matter of hardware, not just software, and AI does not in itself address, let alone resolve, them.

PHYSICAL CHALLENGES

These physical challenges are many and difficult. For example, a human arm or leg is moved by muscles, whereas a robotic limb must be actuated by motors. Each axis of motion through which the limb must move requires more motors.

All of this is doable, as the robotic arms in factories demonstrate, but the high-performance motors, gears and transmissions involved create bulk, cost, power requirements and multiple components that can and will break down.

Once the desired motion has been created, there is the challenge of sensing and feedback. If you pick up a piece of fruit, for example, the nerves in your hand tell you how soft it feels and how hard you can afford to squeeze it. You can taste whether food is cooked and smell whether it is burning.

None of those senses is easy to provide for a robot, and to the extent they are possible, they add more cost. Machine vision and AI may compensate, by observing whether the fruit is squashed or the food in the pan has gone the right colour, but they are an imperfect substitute.

Source: CNA