Intel’s Next PC Chip, Meteor Lake, Will Speed Up AI Later This Year

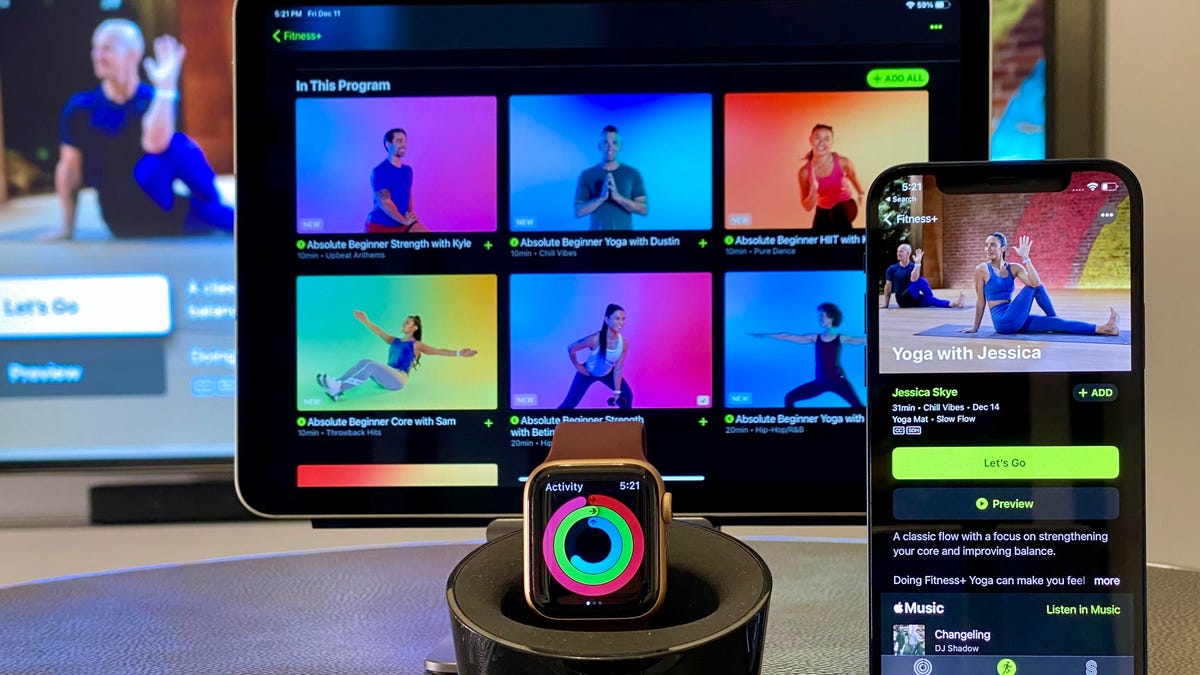

Today’s most glamorous, attention-getting AI tools — OpenAI’s ChatGPT, Microsoft’s Bing, Google’s Bard and Adobe’s Photoshop, for example — run in data centers stuffed with powerful, expensive servers. But Intel on Monday revealed details of its forthcoming Meteor Lake PC processor that could help your laptop play more of a part in the artificial intelligence revolution.

Meteor Lake, scheduled to ship in computers later this year, includes circuitry that accelerates some AI tasks that otherwise might sap your battery. For example, it can improve the AI that picks you out of the video frame, so apps can blur or replace backgrounds more cleanly during videoconferences, said John Rayfield, leader of Intel’s client AI work.

AI models use methods inspired by human brains to recognize patterns in complex, real-world data. By running AI on a laptop or phone processor instead of in the cloud, you can get benefits like better privacy and security as well as a snappier response since you don’t have network delays.

What’s unclear is how much AI work will really move from the cloud to PCs. Some software, like Adobe Photoshop and Lightroom, uses AI extensively for finding people, skies and other subject matter in photos and for many other image editing tasks. Apps can recognize your voice and transcribe it into text. Microsoft is building an AI chatbot called Windows Copilot straight into its operating system. But most computing work today exercises more traditional parts of a processor, its central processing unit (CPU) and graphics processing unit (GPU) cores.

There’s a build-it-and-they-will-come possibility. Adding AI acceleration directly into the chip, as has already happened with smartphone processors and Apple M-series Mac processors, could encourage developers to write more software drawing on AI abilities.

GPUs are already pretty good for accelerating AI, though, and developers don’t have to wait for millions of us to upgrade our Windows PCs to take advantage of them. The GPU offers top AI performance on a PC, but the new AI-specific accelerator is good for low-power operation, Rayfield said. Both can be used simultaneously for top performance, too.

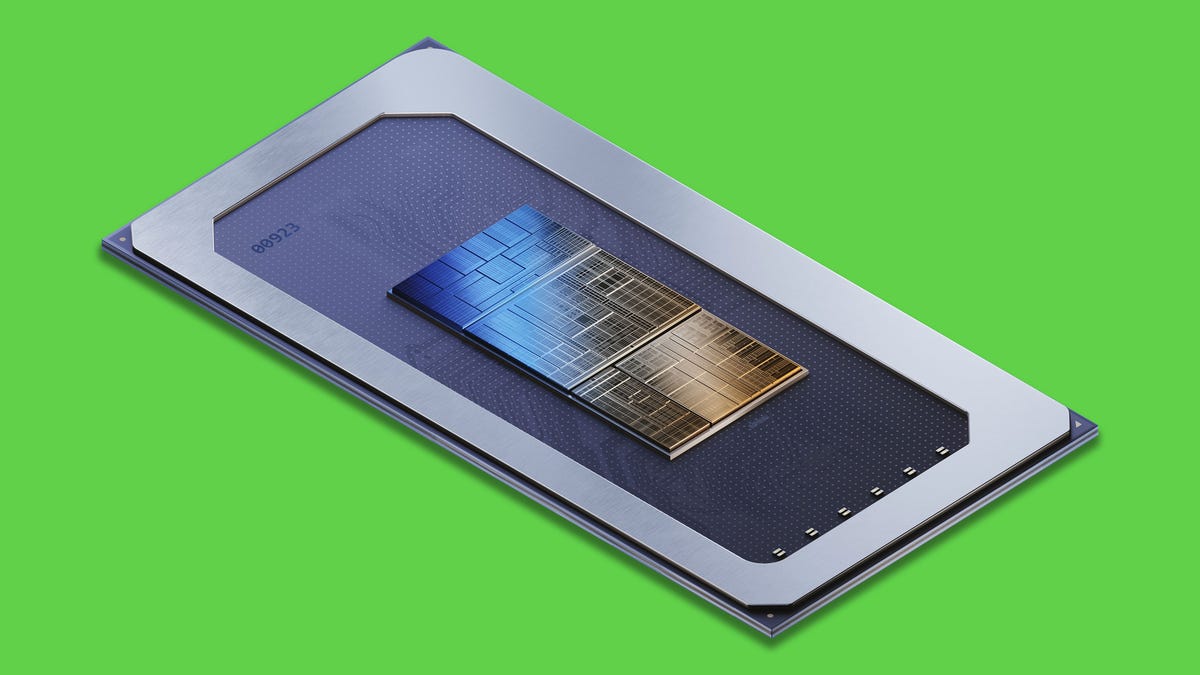

Meteor Lake a key chip for Intel

Meteor Lake is important for other reasons, too. It’s designed for lower-power operation, addressing what is arguably Intel’s single biggest competitive weakness compared with the Apple M-series processors. It’s got upgraded graphics acceleration, which is critical for gaming and important for some AI tasks, too.

The processor also is key to Intel’s yearslong turnaround effort. It’s the first big chip to be built with Intel 4, a new manufacturing process essential to catching up with chipmaking leaders Taiwan Semiconductor Manufacturing Co. (TSMC) and Samsung. And it employs new advanced manufacturing technology called Foveros that lets Intel stack multiple “chiplets” more flexibly and economically into a single more powerful processor.

Chipmakers are racing to tap into the AI revolution, though few as successfully as Nvidia, which earlier in May reported a blowout quarter thanks to exploding demand for its highest-end AI chips. Intel sells data center AI chips, too, but focuses more on economy than performance.

In its PC processors, Intel calls its AI accelerator a vision processing unit, or VPU, a product family and name that stems from its 2016 acquisition of AI chipmaker Movidius.

These days, a variation called generative AI can create realistic imagery and human-sounding text. Although Meteor Lake can run one such image generator, Stable Diffusion, large AI language models like ChatGPT simply don’t fit on a laptop.

There’s a lot of work to change that, though. Meta’s LLaMA and Google’s PaLM 2 are both large language models designed to scale down to smaller “client” devices, like PCs and even phones, that have much less memory.

“AI in the cloud … has challenges with latency, privacy, security, and it’s fundamentally expensive,” Rayfield said. “Over time, as we can improve compute efficiency, more of this is migrating to the client.”

Source: CNET